NIMBY Rails devblog 2023-08

More optimized drawing

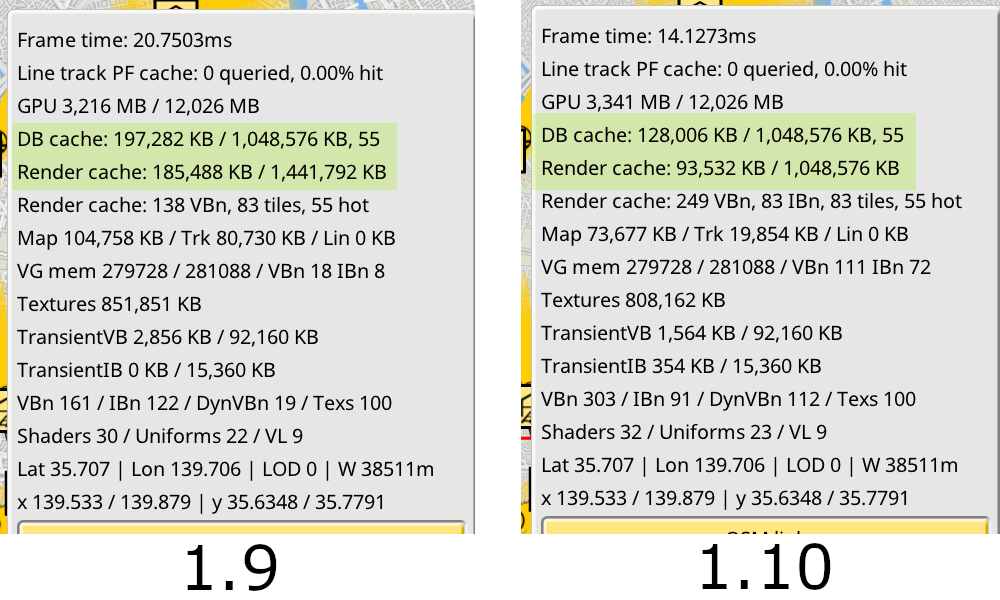

Last month, as preparation for the new map, I started optimizing map line drawing to make it consume less RAM, plus some optimizations for area polygons. It was working very well so I decided that rather than jumping into the new map tasks, I would continue until all old drawing code was replaced with new code, using the same optimization ideas as the line drawing. This involved reviewing how every mesh was stored and rendered, and it also converted track rendering. After two weeks of work I managed to cut overall memory usage by 50%, while also making the rendering faster.

This example is taken from a complex save with many thousands of track segments in the middle of Tokyo, zoomed just at the limit of LOD 0, so it’s the worst possible case from a pure rendering POV. There’s not much more to optimize at this point, other than going into procedural meshes and compute shaders. And these are kind of just hiding the RAM usage, since they require transient memory buffers for their output.

New 1.10 world map, everything redone from scratch

Then it was time to start for real with the 1.10 major task of updating the map. For the good of the game and my development process, I decided against using the old conversion system, which requires expensive servers with hundreds of GB of RAM, and to instead try to come up with a new, slower process which can be done on a lower spec PC but can still be completed in under a few days. The reason for this change is that this time I wanted to be more capable of analyzing map outputs on larger-scale samples without renting a server while I develop. While renting it for a couple of days for the final export is okay, having it running for a month or more gets too expensive very fast.

The last map addition, which added the new population layer, was already halfway there, requiring “only” 128GB of RAM, and it’s less than 50 EUR/month to rent a bare metal server of this size. But I still wanted to see if it was possible to do it on 32GB RAM on a humble i3-12100 I have set up as a home server.

So far it’s working, but I haven’t yet attempted a full planet OSM conversion, so it’s still entirely possible I will fail. For that reason the conversion process needs to be ready to be reinstalled anywhere, quickly and cleanly. I accomplished this in the past with Docker, but this time I went with Nix Flakes. They are a much more developer-oriented tool and make dependency resolution a central part of the toolset, rather than having to roll my own inside a container.

For the conversion process itself, I am throwing away all the 1.1 code, and keeping some of the 1.3 code. In particular everything that has to do with OpenStreetMap is rewritten from scratch, and I am analyzing how every step of loading OSM data and converting it into a database of objects, and then into a rendering-optimized tile database, is performed. The goal is to realize a higher level of optimization, so the resulting game map file is either smaller or, if it ends up a similar or larger size, contains more OSM data at higher fidelity than before. I want to include a lot more OSM tags, even if the game by default is only using a fraction of them. They could be useful in the future or exposed to modders in some way. Do you have very strong opinions about the game not using different textures for natural=shingle and natural=scree? Please feel free to mod them, the data will be there.

But there’s some data that won’t be in the map file. In particular buildings are going to be left out, again. In the past 3 years the building data in OSM has improved immensely. The adjective here is doing double duty, since buildings are now approximately half of the OSM database by size. But, despite this improvement, it’s still easy to browse a bit and find medium towns with no mapped buildings, or cities missing some areas. If I’m going to make the game map file 2x larger it has to be for a good reason, and if I include buildings, I will immediately get “bug reports” about missing buildings in many places. So rather than explaining 1000 times that my own sources are incomplete, while at the same time making the file much larger, I am not including the data. Railway infrastructure and any metadata related to transit systems are also stripped out, this time for gameplay reasons. Exposing that data in the map immediately creates a “need” to make it playable, which I don’t want to do. OSM data is optimized for display and it’s not structured in any way that is usable as a track by the game, for example.

With these decisions made, I implemented a minimally capable system for the intermediate object database. Learning from the 1.3 importer, I made the spatial object index simply the equivalent of locating objects in the in-game tile grid, which gave a huge speedup. This time the intermediate database is also my own custom file format, which is optimized to locate any object in the file in just two file reads with a hash table, rather than the usual binary tree. It’s not useful for much else, but it does not need to be.
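To make the "two file reads" idea concrete, here is a minimal Python sketch of a hash-indexed object file. The actual file format is not described in this post, so everything here is an assumption made for illustration: the slot layout, the fixed probe window, and the names `build`, `Reader` and `bucket_of` are all hypothetical. The trick it shows is that if the writer guarantees every object lands within a small fixed window of its hash bucket, a lookup needs one read for the window and one read for the record.

```python
import io
import struct

# Hypothetical layout: [header][slot table][records]
HEADER = struct.Struct("<Q")   # number of hash buckets
SLOT = struct.Struct("<QQI")   # object id, record offset (0 = empty slot), record length
WINDOW = 8                     # slots fetched by the single index read


def bucket_of(key: int, n_buckets: int) -> int:
    # Multiplicative hash of a 64-bit id, using the high bits of the product.
    mixed = (key * 0x9E3779B97F4A7C15) & 0xFFFFFFFFFFFFFFFF
    return (mixed >> 32) % n_buckets


def _place(slots, key, n_buckets):
    # Linear probing, but never further than WINDOW slots from the bucket.
    start = bucket_of(key, n_buckets)
    for i in range(start, start + WINDOW):
        if slots[i] is None:
            slots[i] = key
            return True
    return False


def build(objects: dict[int, bytes]) -> bytes:
    n_buckets = max(1, 2 * len(objects))
    while True:
        # WINDOW - 1 padding slots so a probe window never has to wrap around.
        slots = [None] * (n_buckets + WINDOW - 1)
        if all(_place(slots, key, n_buckets) for key in objects):
            break
        n_buckets *= 2  # some window overflowed: retry with a sparser table
    out = io.BytesIO()
    out.write(HEADER.pack(n_buckets))
    offset = HEADER.size + len(slots) * SLOT.size  # where the records begin
    for key in slots:
        if key is None:
            out.write(SLOT.pack(0, 0, 0))
        else:
            out.write(SLOT.pack(key, offset, len(objects[key])))
            offset += len(objects[key])
    for key in slots:  # records are stored in slot order
        if key is not None:
            out.write(objects[key])
    return out.getvalue()


class Reader:
    def __init__(self, f):
        self.f = f
        (self.n_buckets,) = HEADER.unpack(f.read(HEADER.size))  # once, at open time
        self.reads = 0  # counts lookup reads only, for demonstration

    def _read_at(self, offset, size):
        self.reads += 1
        self.f.seek(offset)
        return self.f.read(size)

    def get(self, key: int):
        start = HEADER.size + bucket_of(key, self.n_buckets) * SLOT.size
        window = self._read_at(start, WINDOW * SLOT.size)   # read 1: index window
        for slot_key, offset, length in SLOT.iter_unpack(window):
            if offset and slot_key == key:
                return self._read_at(offset, length)        # read 2: the record
        return None
```

With this layout, `Reader(open(path, "rb")).get(object_id)` costs exactly two reads for any stored object and a single read for a missing one. The price is a sparse slot table and a build step that may need to retry, which seems like an acceptable trade for a write-once intermediate file.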

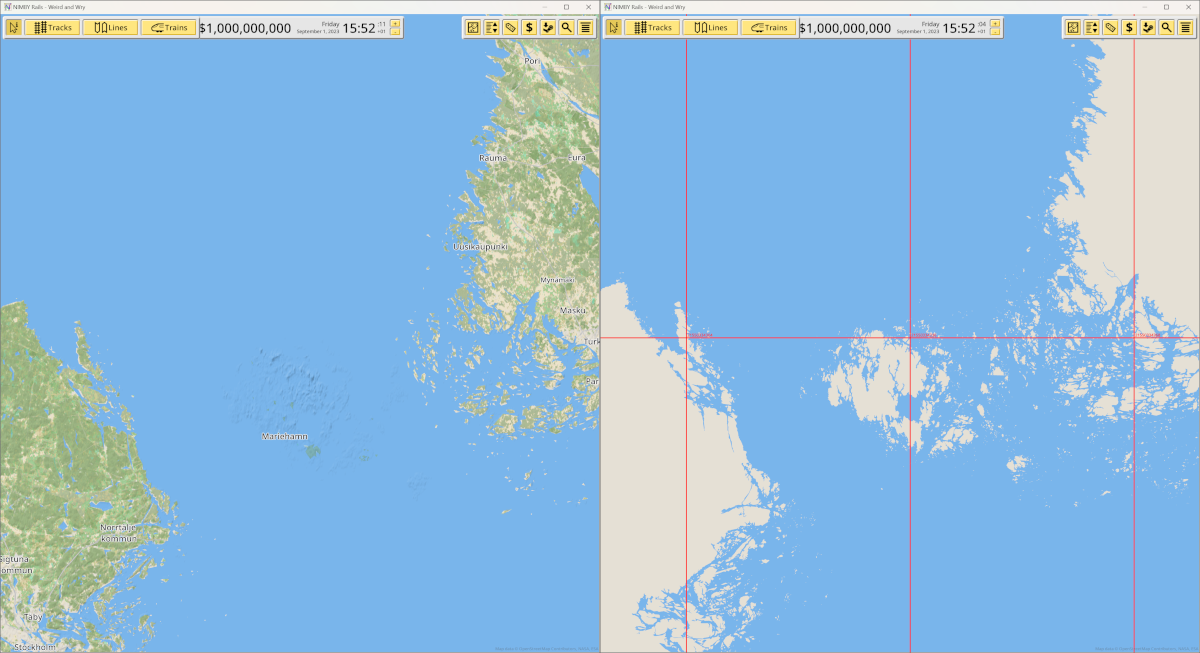

Before attempting full planet imports and map builds, I decided to start with the most complex OSM object: coastlines and seas. Coastlines are the monsters of the OSM map. If put in a relation they would have millions of nodes. Instead of using the planet OSM coastlines I use a dataset of pre-made, pre-cut water polygons which are easier to work with, although they come with their own set of challenges. For example, the tile pyramid has multiple levels of detail, and less detailed levels require a reduction in the quality of the polygon (this is just the number of nodes). Depending on how that is done, seams and thin blank areas will appear when you zoom out. So the simplification process has to be aware of this and respect the “extreme” points when possible. Another issue is that these polygons might be smaller but they are not tiny, and they still might be extremely complex, especially around archipelagos. These polygons have holes in them, and triangulating them must be done carefully after their points have been simplified, to avoid creating impossible geometries. This happened to the Åland Islands, which are broken in 1.9 but already corrected in 1.10.
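As an illustration of seam-aware simplification, here is a small Python sketch. It is not the importer's actual code, and it rests on two assumptions: that the “extreme” points are the vertices lying on the border of the cell a water polygon was cut to, and that a plain Douglas-Peucker pass is a reasonable stand-in for the simplification step. The names `simplify_ring` and `is_pinned` are hypothetical. Border vertices are pinned so neighbouring polygons keep sharing them, and only the chains between pinned vertices are simplified.

```python
import math


def _line_distance(p, a, b):
    # Distance from point p to the infinite line through a and b.
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / math.hypot(dx, dy)


def _douglas_peucker(points, tolerance):
    # Iterative Douglas-Peucker on an open chain; both endpoints always survive.
    if len(points) < 3:
        return list(points)
    keep = [False] * len(points)
    keep[0] = keep[-1] = True
    stack = [(0, len(points) - 1)]
    while stack:
        lo, hi = stack.pop()
        worst, worst_d = None, tolerance
        for i in range(lo + 1, hi):
            d = _line_distance(points[i], points[lo], points[hi])
            if d > worst_d:
                worst, worst_d = i, d
        if worst is not None:
            keep[worst] = True
            stack.append((lo, worst))
            stack.append((worst, hi))
    return [p for p, k in zip(points, keep) if k]


def simplify_ring(ring, tolerance, is_pinned):
    """Simplify a closed ring (first vertex not repeated), keeping pinned vertices."""
    n = len(ring)
    pinned = [i for i in range(n) if is_pinned(ring[i])]
    if len(pinned) < 2:
        # Fallback anchors for rings that barely touch the border, or not at all.
        first = pinned[0] if pinned else 0
        far = max(range(n), key=lambda i: math.dist(ring[i], ring[first]))
        pinned = sorted({first, far})
    out = []
    # Walk each chain between consecutive pinned vertices, wrapping at the end.
    for a, b in zip(pinned, pinned[1:] + [pinned[0] + n]):
        chain = [ring[i % n] for i in range(a, b + 1)]
        out.extend(_douglas_peucker(chain, tolerance)[:-1])  # drop shared endpoint
    return out


def on_cell_border(cell):
    # Builds an is_pinned predicate for an axis-aligned cell (xmin, ymin, xmax, ymax).
    xmin, ymin, xmax, ymax = cell
    return lambda p: p[0] in (xmin, xmax) or p[1] in (ymin, ymax)
```

Calling `simplify_ring(ring, tolerance, on_cell_border(cell))` removes the small wiggles along the coastline side of a polygon, while a vertex sitting in the middle of a straight cell edge is kept even though it is geometrically redundant, since the polygon on the other side of that edge may depend on it.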

It’s still early days for the 1.10 map rebuild process, but all the foundations are now in place to expand it to more data. 1.10 will take longer to develop than the last 3 major releases done in 2023, but it’s important it’s done right, since ideally its beta series should not involve having to download a 20GB+ file more than once.