NIMBY Rails devblog 2020-07

Internal development notes, very slightly cleaned up and commented a month later.

TL;DR: networked train simulation, solution for traffic jams, procedural buildings research

Multiplayer: 80% of the way there

The first half of July was devoted to continuing the huge effort of bringing up multiplayer for all the game systems. With the database-like part of the game state done in June, now it was time to focus on the simulation-like part, which is much more like traditional multiplayer networking as understood in videogames.

The database-like synchronization is about sending the minimal set of changes that happen in a frame to a subset of the objects. The total of objects can be in the hundreds of thousands, but since players can only realistically modify a few dozens of them per frame, the traffic and processing is kept low. It was still a huge challenge to implement and to keep the code looking sane and not too disturbed by the need to finely track changes (double buffering and comparing changes between frames was never considered; as stated, the goal is at least hundreds of thousands of objects, ideally millions of them).

The simulation synchronization instead deals with a set of objects that change on every logic frame (the trains, and others in the future, like the passenger simulation). I don’t anticipate players trying to make more than tens of thousands of trains and stations. But even such an extreme must be considered and planned for.

Fortunately, synchronizing a deterministic simulation with fixed timestep in a client-server game is a very well explored area and there’s a variety of strategies. Since this game simulation has virtually zero player input to disturb it (it’s trains on rails), it is possible to implement one of the simplest strategies: state synchronization with a jitter buffer. In this strategy the server is the only source of truth, but the clients are just as capable of running the entire simulation, and constantly do, except they are delayed on purpose by a number of frames. The server then sends the entire state of its simulation from time to time, along with a frame number, and the client stores it in a buffer. When the client arrives to the corresponding frame number, it discards its locally simulated state and uses the server sent one from the buffer, which then becomes the next base state to keep the local simulation going.

Since this simulation is so deterministic I can also just avoid sending a huge amount of potential state messages. Basically the server only tries to send a single update per train per second, instead of per frame. This means some trains in the clients may be up to one second outdated if something is not properly simulated in the client (the rare player action that changes the simulation, like switching train lines), but it’s a train simulator, not Counter Strike.

Networking with Steam Networking Sockets

Months ago I prepared the lowest level networking abstraction in the game to be independent of the OS, so I could plug any networking library. The game now expects to be able to send messages of arbitrary size to peers, both reliable (fully received, without errors, and in order) and unreliable ones. Up until now I was wrapping the dead simple znet library with this low level abstraction, to use plain TCP sockets. In July I also added an implementation using Steam Networking Sockets, which has two main advantages: the game can use the Steam relay network to avoid routing problems with ports, and it has actual support for both reliable and unreliable messages over UDP. The original znet TCP code will be kept for development purposes, and the release version of the game will use Steam Networking Sockets.

MVP solution for traffic jams

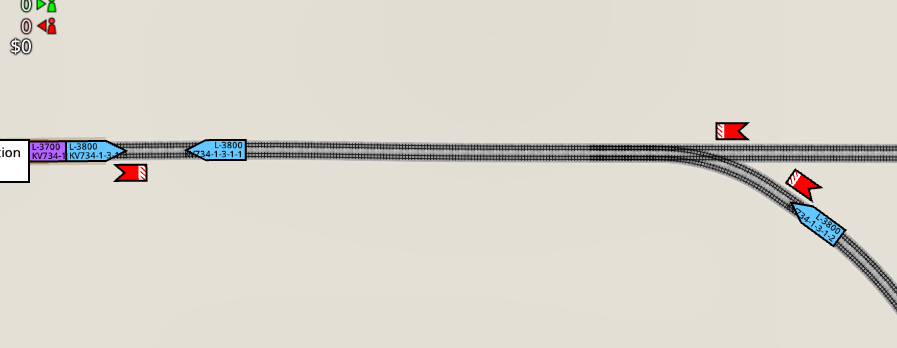

The game will need traffic signals. There is no way around it. And along with them, it’s also a very high chance I will have to offer optional single track mode, otherwise many signal constructions are not possible. But in the meantime I need to keep going with other items that have higher priority, so I implemented a much simpler system to coordinate access to branched tracks:

Basically, potentially congested tracks (branch parents and children) are flagged as locked or not. They get locked when any train is on top of them. Trains already on a locked track can roll into any kind of track, but trains on an unlocked track cannot roll onto a locked track and must wait for it to become unlocked. Deadlocks are avoided by the deterministic order of evaluation of train circulation (some trains always win because of their lower id, and given the same conditions, the same train always wins). This very simple system is enough for now, and successfully keeps branches usable. It’s also inefficient, but given the only possible kind of branch available (for now) in-game, it’s not much more inefficient than real life tracks of the same design. Real high flow designs like flying junctions will only be possible with single track branching and flexible signals.

Speaking of signals, I seized the opportunity to test some visuals for them, and to communicate the user that branch(ed) tracks are now under some level of signal control, even if they cannot be changed by the user.

Researching procedural building generation

More than a year ago I had to remove buildings from the OSM map in the quest of trying to fit it under 20GB. They also have the problem of spotty coverage. Many areas have decent road/street map but zero buildings, even adjacent areas in the same city. I had an item in my “future research” list for a long time to look into procedurally generating buildings. Now that I am starting to think about population simulation, the item became more relevant, since it could be an elegant way to tie simulated population with simulated buildings. So I decided to invest a couple of days into researching procedural building generation.

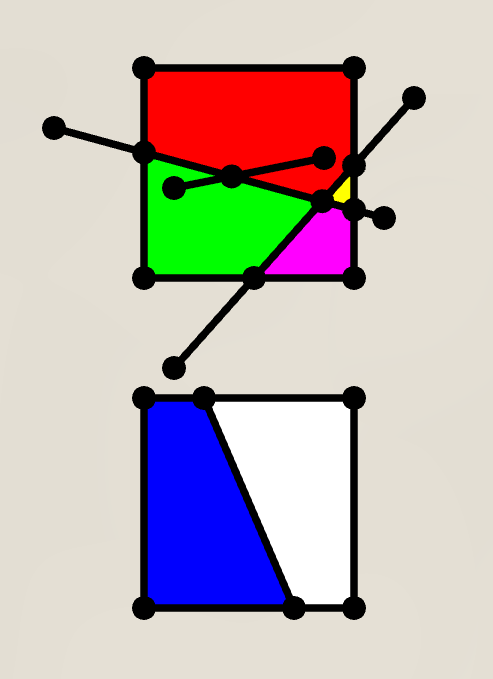

Whatever I do, it must adapt to the existing street grid. But said grid is no grid at all. The OSM vector data is more like a polyline soup, nothing like the graph-like structure I would need for a robust, deterministic, analytical solution. So the first item is to extract a clean connectivity graph from the arbitrarily linked, overlapping, potentially duplicated OSM polyline data. And then from that data, find the enclosed areas by walking the edges in CCW winding order:

This test image shows a bunch of polylines reproducing some of the pathologies I would later find in the real map data. Colinear points and segments, segments intersecting in any possible way, dead ends, disconnected graph areas, the outer area, etc. OSM is optimized for display, not for creating a connectivity graph, but that is my goal, and I more or less succeeded:

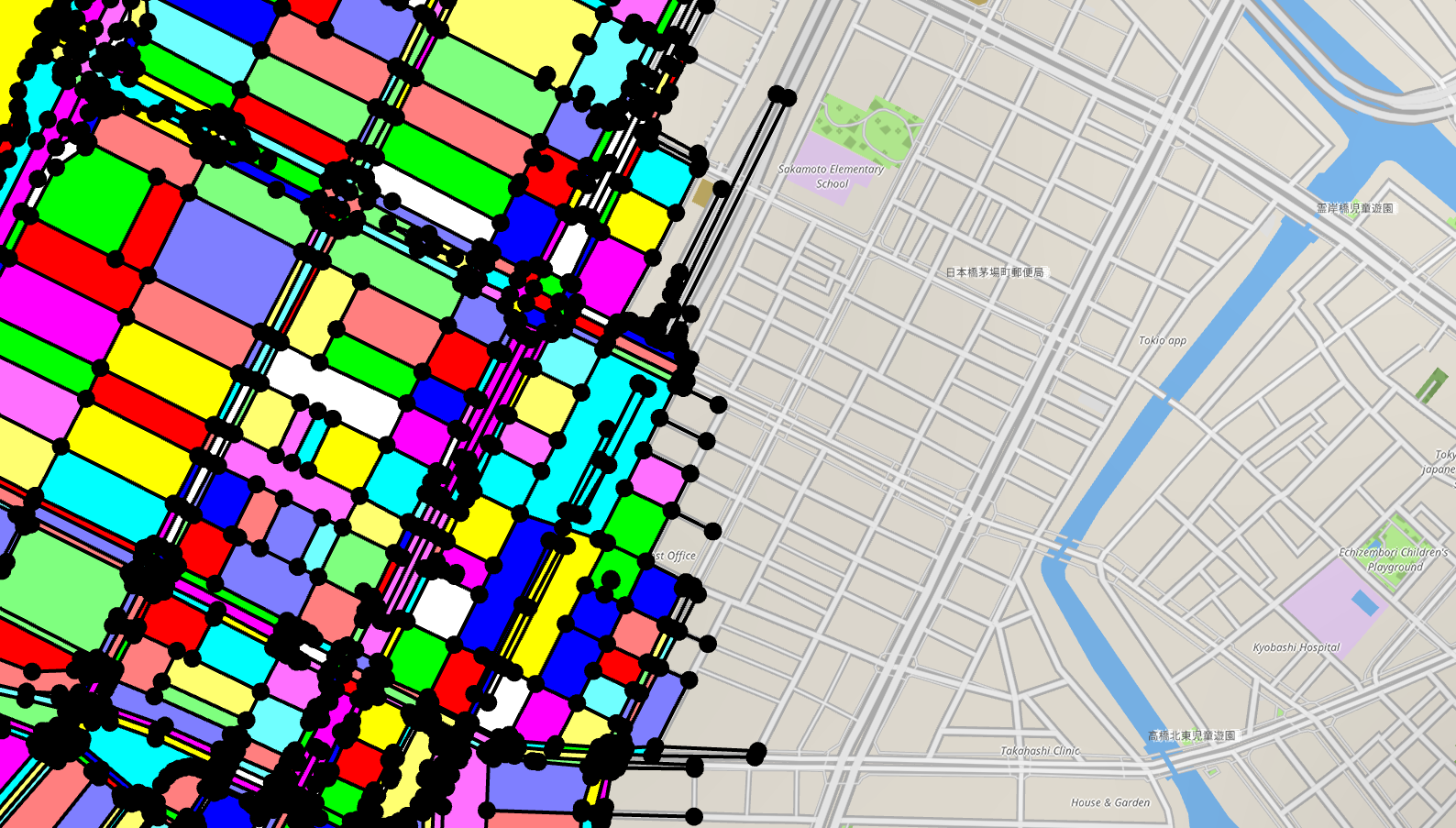

I can now load any LOD 0 level tile and create a connectivity graph of all the streets and roads contained, and then find out all the enclosed polygons. These polygons will be the future parcels that contain the procedural buildings.

The first step of the graph generation is to find all the intersections between all the segments, and I considered using the Bentley–Ottmann algorithm. In the end I discarded this, since I also need to keep the existing polyline connectivity while also building a graph at the same time. My solution, based on a RTree, keeps a rough O(k*n*(log n)) runtime and memory usage, but with a larger k than an optimal algorithm like Bentley–Ottmann would. I’ve clocked it at around 60ms for the most complex tiles in the map after an intense optimization session that improved it by ~65%. This is still a bit too high since there’s no actual building generation happening yet, and it ruins the near-instant loading feeling of the map. Maybe this will be made in a separate pass after the map data is loaded and displayed, or I will find further ways to optimize it.

There’s still a lot of work to before any buildings show up, but I consider this the most difficult step. Plus, having a connectivity for the streets and roads is a very powerful tool for future features.